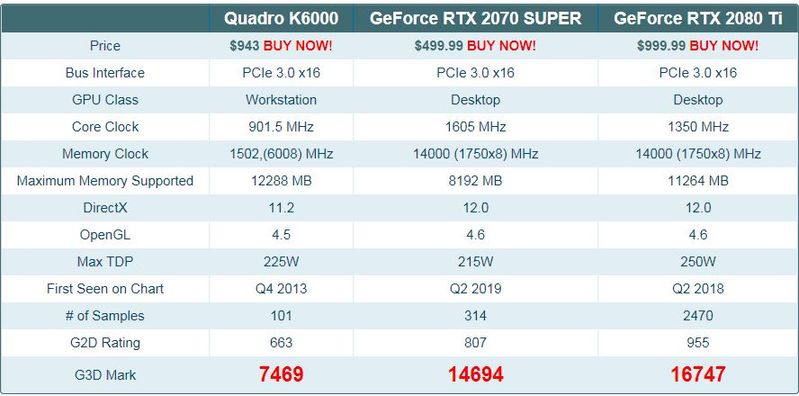

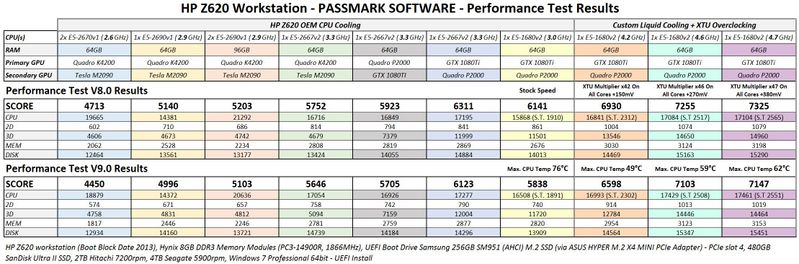

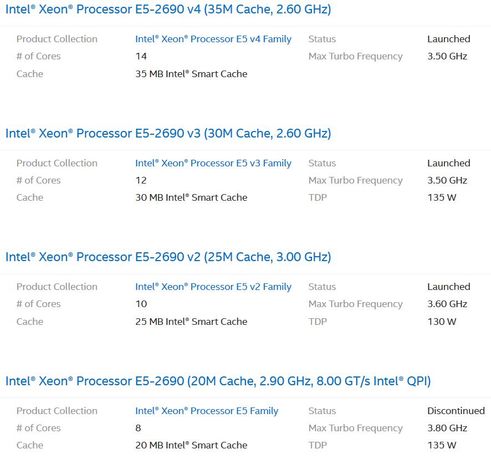

Z820 graphics card upgrade
